Deploying and Using the Charliecloud Run Mechanism

Note

Support for Charliecloud as a run mechanism within Jacamar CI was introduced in release v0.25.0. If you encounter any issues or have suggestions, we would appreciate the feedback.

Jacamar CI supports the use of Charliecloud to run jobs within target containers while remaining in the user’s namespace. This is all done while preserving support for existing executor types (e.g., shell and flux). To understand how this works, first recall that every job Jacamar CI runs is based upon a script, environment, and arguments provided by the GitLab Custom Executor.

The user application jacamar (traditionally run after jacamar-auth has authorized the job and dropped permissions) combines the runner generated script with commands derived from the executor type and passes them to a clean Bash login shell. The new Charliecloud run_mechanism modifies this process to optionally leverage a ch-run command when users provide an image in their job.

With this new workflow we can ensure that the runner generated scripts are always executed within the defined container while still potentially submitting them to the desired scheduling system. The new mechanism also handles the distinct runner generated stages (see official docs) by ensuring an administratively defined runner image is used where appropriate, mounting all required volumes to preserve stateful information, and automating registry credential management with the CI_JOB_TOKEN. The resulting command would look something like:

/usr/bin/ch-run \
    --write-fake \
    --bind='<data-dir>/builds/ci-example_123/000' \
    --bind='<data-dir>/cache/ci-example_123' \
    --bind='<data-dir>/scripts/ci-example_123/000' \
    '<data-dir>/scripts/ci-example_123/000/image.sqfs' \
    -- '<data-dir>/scripts/ci-example_123/000/build_script.bash'
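The command above can be read as a fixed prefix, one --bind option per required directory, the image, and finally the runner generated script. The following Python sketch assembles an argument list of that shape. The function name build_ch_run and the /data base directory are hypothetical and used only for illustration; this is not Jacamar CI's actual implementation.

```python
import shlex


def build_ch_run(image, script, binds, ch_run="/usr/bin/ch-run", extra_args=()):
    """Assemble a ch-run argument list shaped like the example command.

    All parameter names are illustrative assumptions, not Jacamar CI settings.
    """
    argv = [ch_run, "--write-fake"]
    # One --bind option per directory that must be visible in the container.
    argv += [f"--bind={path}" for path in binds]
    # Optional additional ch-run options go before the image.
    argv += list(extra_args)
    # The image comes last, followed by "--" and the script to execute.
    argv += [image, "--", script]
    return argv


data_dir = "/data"  # hypothetical stand-in for <data-dir>
job = "ci-example_123"
argv = build_ch_run(
    image=f"{data_dir}/scripts/{job}/000/image.sqfs",
    script=f"{data_dir}/scripts/{job}/000/build_script.bash",
    binds=[
        f"{data_dir}/builds/{job}/000",
        f"{data_dir}/cache/{job}",
        f"{data_dir}/scripts/{job}/000",
    ],
)
print(shlex.join(argv))
```

Keeping the command as a list until the last moment avoids quoting mistakes when paths contain spaces or shell metacharacters.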

Configuration

Note

The Charliecloud run_mechanism is only observed with the jacamar application and is not utilized during any authorization steps. It relies on an existing user namespace application and all supporting configurations already established for your specific environment.

[general] - Table

| Key | Description |
|---|---|
| run_mechanism | Defines a proposed mechanism to execute all runner generated scripts rather than simply relying on the user’s existing Bash shell. Use of this is dictated by the individual mechanism, for example with |
| force_mechanism | Requires the defined mechanism is used for all jobs, ignoring user setting/behaviors that normally trigger the usage. |

[general]

run_mechanism = "charliecloud"

force_mechanism = false

[general.charliecloud] - Table

| Key | Description | Type |
|---|---|---|
| | Full path to the Charliecloud applications, used in constructing all commands. When not provided the application found in the users | |
| | Helper image that will be used for standard runner managed actions (i.e., Git, artifacts, and caching). If not provided the host system will be used for these steps instead. | |
| | Additional ch-run options that are used with the | |
| | Override the default image pull-policy specifically for the | |
| | This image is used when no user provided image is found (only observed when | |
| | Additional ch-run options that are used in user’s job steps. | |
| | Limits the use of containers to the step_script (user defined script combining the | |
| | When defined only images that match this list of regular expressions will be allowed. | |
| user_volume_variable | Defines the prefix for a CI variable users can leverage to mount custom volumes at runtime. | |
| | Allows for an admin defined script that is run during the | |
| | Ignores any user defined arguments found in the | |
| image_storage_dir | Establishes the directory for setting the | |
| disable_convert | Prevents the use of ch-convert. | |
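The option described as a list of regular expressions acts as an allowlist for user provided images. A minimal Python sketch of that check follows. It assumes unanchored matching (a pattern may match anywhere in the image name unless it uses ^ or $), which the text above does not specify; image_allowed and the registry names are hypothetical.

```python
import re


def image_allowed(image, allowed_patterns):
    """Return True when the image may be used.

    An empty or missing list means no restriction is configured.
    Unanchored matching is an assumption made for this sketch.
    """
    if not allowed_patterns:
        return True
    return any(re.search(pattern, image) for pattern in allowed_patterns)


# Hypothetical allowlist: only images hosted on one internal registry.
patterns = [r"^registry\.example\.com/"]

print(image_allowed("registry.example.com/team/app:1.0", patterns))
print(image_allowed("docker.io/library/alpine:latest", patterns))
```

Anchoring patterns with ^ is generally the safer choice for an allowlist, since an unanchored pattern could match a registry name embedded later in an image path.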

Example

If you already have a functional Charliecloud deployment available you can add the following to your existing Jacamar CI configuration:

[general]

run_mechanism = "charliecloud"

[general.charliecloud]

# Allowing users to define their own mounted volumes is recommended but optional.

user_volume_variable = "CH_VOLUMES"

User dictated scripts (e.g., those from the before_script, script, and after_script) will all be run using the image defined at the job level. All other aspects of the job (e.g., Git, artifacts, and caching) in this case will run on the host system.

To observe a simple example you can use the following job:

stages:
  - host
  - container

after_script:
  - whoami

hello-host:
  stage: host
  script:
    # This job runs on the host system because no image is provided.
    - cat /etc/os-release
    - date >> date.txt
  artifacts:
    paths:
      - date.txt

hello-container:
  stage: container
  image: registry.access.redhat.com/ubi9/ubi-minimal:latest
  # variables:
  #   Adding a variable like this will cause the string to be added as a
  #   --bind argument in the generated run command.
  #   CH_VOLUMES_0: ???:/example/scratch
  script:
    # Because we have defined an image our job will instead run using Charliecloud.
    - cat /etc/os-release
    # Artifacts are properly handled by the gitlab-runner on the host system
    # and mounted into the container.
    - cat date.txt

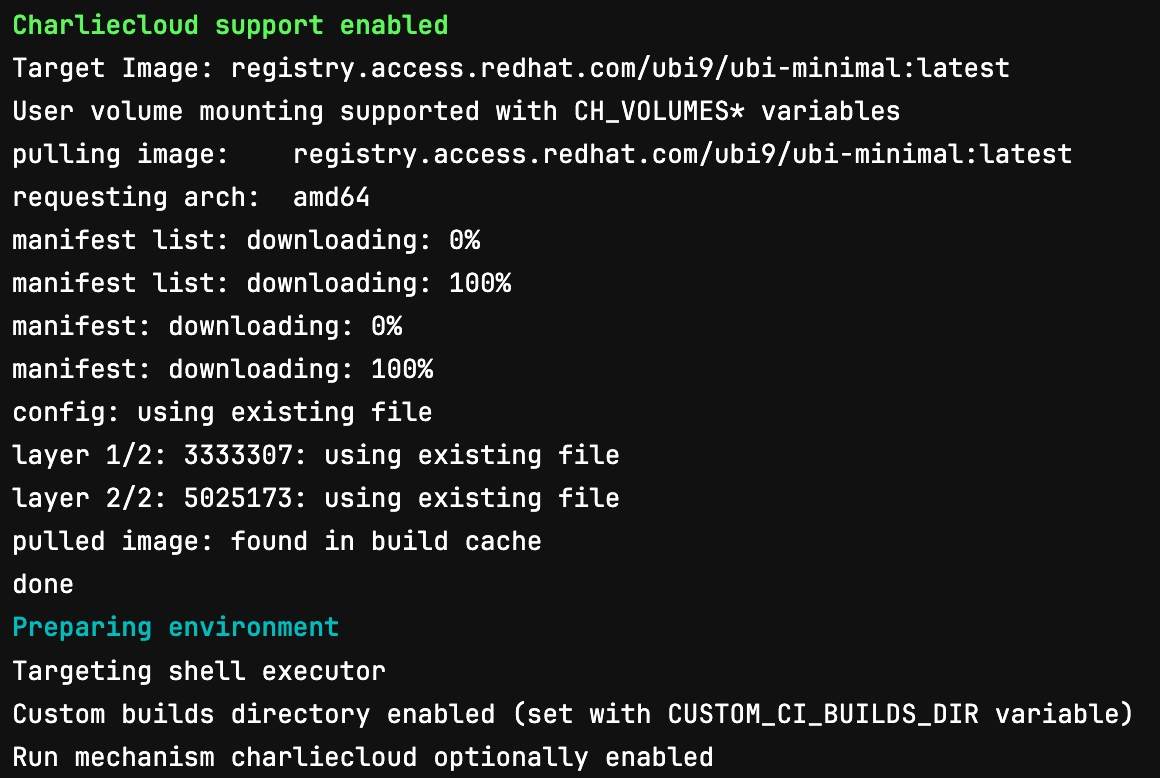

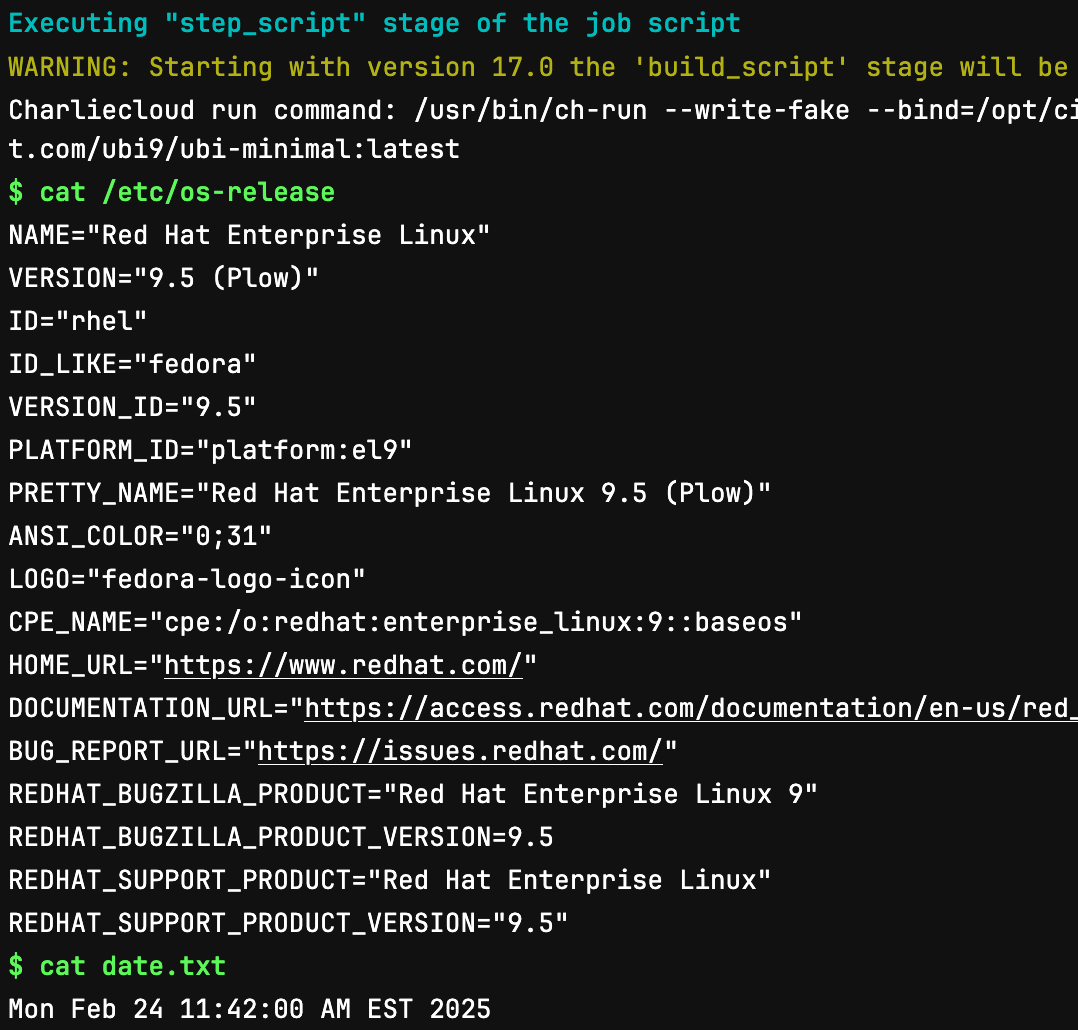

Examining the second job (which uses the Charliecloud mechanism) we can identify several important aspects:

- We are still using a shell executor, meaning the containers will run in the user’s environment on the machine where the runner is hosted.

- In our test runner we still rely on setuid to drop permissions and all aspects of the job observe this as you would expect. No ch-* commands are run until after authorization and downscoping have taken place.

- Build directories are still created in the defined data_dir and mounted into the container at runtime following the same folder paths.

- During the prepare_exec stage the user defined image will be pulled and potentially archived based upon the executor configuration (i.e., batch executor converts images to a SquashFS in order to guarantee they are available on the compute resources).

Finally, we can clearly see that the user’s script is running in our UBI9 image and, due to the correct mappings, features like artifacts and caching will be made available with no additional effort required.
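The commented CH_VOLUMES_0 variable in the example job relies on the user_volume_variable prefix from the configuration. As a rough Python sketch of the idea, every job variable whose name starts with the configured prefix could be turned into one bind mount. The function name, the sorted ordering, and the sample paths are assumptions for illustration; how Jacamar CI validates or orders these values is not described here.

```python
def collect_user_volumes(env, prefix):
    """Build one --bind option per variable whose name starts with prefix.

    env is a mapping of variable names to values (for example os.environ).
    Names are sorted so the resulting order is deterministic.
    """
    binds = []
    for name in sorted(env):
        value = env[name]
        if name.startswith(prefix) and value:
            binds.append(f"--bind={value}")
    return binds


# Hypothetical job environment; only the CH_VOLUMES_* entries are picked up.
job_env = {
    "CH_VOLUMES_0": "/scratch/user:/example/scratch",
    "CH_VOLUMES_1": "/projects:/projects",
    "HOME": "/home/user",
}
print(collect_user_volumes(job_env, "CH_VOLUMES"))
```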

Custom Arguments

Unless disabled by configuration, you can provide custom arguments to the ch-run command:

job:
  variables:
    JACAMAR_CI_CHARLIECLOUD_ARGS: "--set-env=FILE"
  script:
    - make test

Note that it is up to the user to ensure that these arguments do not conflict with any defaults required to realize a successful CI/CD job. To help with this, the full ch-run ... command is always printed to the job log. Defer to the official Charliecloud documentation for details on all options.
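Because several options can be packed into the single JACAMAR_CI_CHARLIECLOUD_ARGS string, it helps to picture how such a value might be split into individual ch-run arguments. The Python sketch below uses shell-style tokenization from the standard library. The function name and the disabled flag are hypothetical, and the exact parsing Jacamar CI performs is an assumption.

```python
import shlex


def split_user_args(raw, disabled=False):
    """Split a user supplied argument string into separate tokens.

    When disabled is True (mirroring the admin option that ignores user
    arguments), nothing is returned. Quoting follows POSIX shell rules.
    """
    if disabled or not raw:
        return []
    return shlex.split(raw)


# Two options in one variable; the quoted glob stays a single token.
tokens = split_user_args("--set-env=FILE --unset-env='FOO*'")
print(tokens)
```

Comparing these tokens with the full command printed in the job log is a quick way to spot an option that collides with one of the defaults.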

Authentication

As defined in the authentication documentation, Charliecloud does not use configuration files and instead relies upon the CH_IMAGE_PASSWORD and CH_IMAGE_USERNAME environment variables.

The priority of authentication options is as follows:

1. If user provided CI/CD variables are detected, these are relied upon.

2. If the target image is from the same GitLab registry as the CI job itself, we automatically inject the CI_JOB_TOKEN.

3. For all other cases, no authentication is provided.
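The priority order above can be sketched as a small selection function. This Python example is illustrative only. It assumes the user provided variables are CH_IMAGE_USERNAME and CH_IMAGE_PASSWORD, that "same GitLab registry" is decided by comparing the image's registry host with GitLab's predefined CI_REGISTRY variable, and that the job token is paired with the gitlab-ci-token username; Jacamar CI's real checks may differ.

```python
def select_credentials(env, image):
    """Return (username, password) for the image pull, or None.

    Priority: user provided variables, then CI_JOB_TOKEN for images in the
    job's own GitLab registry, otherwise no authentication.
    """
    user = env.get("CH_IMAGE_USERNAME")
    password = env.get("CH_IMAGE_PASSWORD")
    if user and password:
        return (user, password)

    registry = env.get("CI_REGISTRY")
    token = env.get("CI_JOB_TOKEN")
    image_host = image.split("/", 1)[0]
    if registry and token and image_host == registry:
        return ("gitlab-ci-token", token)

    return None


# Hypothetical job environment without user provided credentials.
job_env = {"CI_REGISTRY": "registry.example.com", "CI_JOB_TOKEN": "abc123"}
print(select_credentials(job_env, "registry.example.com/group/app:1.0"))
print(select_credentials(job_env, "docker.io/library/alpine:latest"))
```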

SquashFS

By default we will utilize ch-convert to generate a SquashFS archive of the image when using a batch executor. This default behavior can be modified through configuration, but care should be taken to ensure the new image_storage_dir is accessible by the target compute resources:

[general.charliecloud]

disable_convert = true

image_storage_dir = "/target/dir"

Note

We are interested in improvements to how we manage/store converted images between jobs. However, at this time these flattened images will be removed automatically upon completion of the job.